Bloom

A modern game without bloom is like a... uhm... basically, every modern game has bloom: a simulated effect of bright light "bleeding" into its surroundings. The reason behind the bleeding is that real lenses never focus perfectly, so really, everything bleeds into its surroundings a bit. For light of normal intensity it's not noticeable, but for something like the sun or its reflection, you can clearly see the effect. A physically accurate model of bloom for a common camera with a circular lens would require simulating something called an "Airy disk": a disk of light with a set of diffracted concentric rings around it. As far as I can tell, the reason this isn't implemented is that it's a) slow, and b) not distinguishable from a simple Gaussian blur, as the concentric rings are much dimmer than the central bright spot. But even with a Gaussian blur there are problems!
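To put a number on "much dimmer", here is a minimal sketch that evaluates the textbook Airy intensity profile, I(x) = (2·J1(x)/x)², normalized so the center is 1. The function names are my own, and J1 is computed with a simple numerical integral rather than a library call, so treat it as an illustration and not as production code.

```cpp
#include <algorithm>
#include <cmath>

// Bessel function of the first kind, order 1, via Bessel's integral:
//   J1(x) = (1/pi) * integral from 0 to pi of cos(t - x*sin(t)) dt
// evaluated with the midpoint rule.
double bessel_j1(double x) {
    const double pi = 3.14159265358979323846;
    const int steps = 2000;
    const double h = pi / steps;
    double sum = 0.0;
    for (int i = 0; i < steps; ++i) {
        const double t = (i + 0.5) * h;
        sum += std::cos(t - x * std::sin(t));
    }
    return sum * h / pi;
}

// Airy pattern intensity relative to the center, for a dimensionless
// radial coordinate x (x = 0 is the middle of the bright spot).
double airy_intensity(double x) {
    if (std::fabs(x) < 1e-9) return 1.0;  // limit of (2*J1(x)/x)^2 as x -> 0
    const double a = 2.0 * bessel_j1(x) / x;
    return a * a;
}

// Brightest point of the first ring: scan between the first dark ring
// (x is about 3.83) and the second one (x is about 7.02).
double first_ring_peak() {
    double best = 0.0;
    for (int i = 0; i <= 3100; ++i) {
        best = std::max(best, airy_intensity(3.9 + 0.001 * i));
    }
    return best;
}
```

Calling `first_ring_peak()` gives roughly 0.0175, so the brightest ring is under 2% of the central spot, and the later rings are dimmer still.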

The Gaussian blur required for something like bloom has to cover a very large area; while 40 pixels doesn't seem like a lot, a naive 40×40 kernel means 1600 (!) texture fetches that the GPU has to do for every pixel. To help with this, smart people came up with an alternative way to do a large-radius blur: repeatedly downsampling the image (halving the width and height each time), then upsampling and blurring it, and finally compositing the result back onto the previous image. I'm not sure how to write this out in text so that it's readable, so here's a diagram:

That's a neat trick! It greatly speeds up the post-processing effect and gives better-looking results than a plain blur (it does have some downsides, but oh well). The issue is implementing it with my setup. The following section is about my Vulkan implementation details, so feel free to skip it.
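For a rough sense of why the downsample/upsample trick is so much cheaper, here is a back-of-the-envelope sketch. Everything in it is an assumption for illustration: the `Extent` struct, the function names, the number of mip levels, and especially the number of texture taps per output texel (a real filter may use more or fewer).

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Extent {
    uint32_t width;
    uint32_t height;
};

// Sizes of the successively halved images, stopping after max_levels
// or once the image has shrunk to 1x1.
std::vector<Extent> bloom_mip_chain(Extent full, uint32_t max_levels) {
    std::vector<Extent> chain;
    Extent e = full;
    for (uint32_t i = 0; i < max_levels; ++i) {
        e.width = std::max<uint32_t>(1, e.width / 2);
        e.height = std::max<uint32_t>(1, e.height / 2);
        chain.push_back(e);
        if (e.width == 1 && e.height == 1) break;
    }
    return chain;
}

// Texture fetches for a naive kernel_side x kernel_side blur at full resolution.
uint64_t naive_blur_fetches(Extent full, uint32_t kernel_side) {
    return uint64_t(full.width) * full.height * kernel_side * kernel_side;
}

// Texture fetches for the whole down/up chain, assuming every output
// texel of every pass reads `taps` texels (hypothetical filter size).
uint64_t chain_fetches(Extent full, const std::vector<Extent>& chain, uint32_t taps) {
    uint64_t total = 0;
    // Downsample passes: one pass writes each level of the chain.
    for (const Extent& e : chain) {
        total += uint64_t(e.width) * e.height * taps;
    }
    // Upsample passes: every level except the smallest gets written again...
    for (std::size_t i = 0; i + 1 < chain.size(); ++i) {
        total += uint64_t(chain[i].width) * chain[i].height * taps;
    }
    // ...and the last pass composites back at full resolution.
    total += uint64_t(full.width) * full.height * taps;
    return total;
}
```

With a 1920×1080 image, 5 levels, and 9 taps per texel, `chain_fetches` comes out around a hundred times smaller than `naive_blur_fetches` with a 40×40 kernel. A separable Gaussian would narrow that gap considerably, so take the ratio as a ballpark only.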

I don't want to spend a lot of time writing this, so don't expect much.

Before bloom, my pipeline was a neat and tidy single VkRenderPass, with everything separated into subpasses and all the images passed along as input attachments. Since the bloom implementation needs to read the whole texture and downsample it in a compute shader, the image has to be passed in as a sampled texture (and as a storage texture for output). That meant I had to split the geometry and atmosphere subpass and the final composition (and imgui) subpass into separate render passes. Thankfully, it wasn't that hard; maybe even a bit less confusing than dealing with subpass dependencies and implicit layout transitions (dynamic rendering local read, please be more widely available; Vulkan 1.4, thank you). The part I expected to be hard was the synchronization of the compute shaders (downsample and upsample), which also turned out to be not that complicated. If only I didn't make dumb mistakes like forgetting to change the referenced image... Also, a note about layouts: since my atmosphere rendering requires reading from and writing to the same texture (for multiple atmospheres), that image had to be in VK_IMAGE_LAYOUT_GENERAL anyway. That simplified things a bit: layout transitions for the transient bloom images seemed kinda useless, since they're read and written in quick succession, so I kept them in the general layout too. I still need to benchmark it though. Back to the other stuff now.
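To make the synchronization part a bit more concrete, here is a sketch of how the order of compute dispatches and barriers could be planned. It is not my actual code and deliberately avoids the Vulkan headers: `BloomStep`, `plan_bloom`, the 8×8 workgroup size, and the choice to upsample all the way back into mip 0 are assumptions made for the example.

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// One entry in the recorded command sequence.
struct BloomStep {
    enum class Kind { Dispatch, Barrier };
    Kind kind;
    uint32_t src_mip;   // mip level read by the shader (Dispatch only)
    uint32_t dst_mip;   // mip level written by the shader (Dispatch only)
    uint32_t groups_x;  // workgroup counts (Dispatch only)
    uint32_t groups_y;
};

constexpr uint32_t kLocalSize = 8;  // assumed local_size_x/y of the compute shaders

// Round up so that partially covered tiles still get a workgroup.
uint32_t group_count(uint32_t texels) {
    return (texels + kLocalSize - 1) / kLocalSize;
}

std::vector<BloomStep> plan_bloom(uint32_t width, uint32_t height, uint32_t mip_count) {
    std::vector<BloomStep> steps;
    auto mip_w = [&](uint32_t m) { return std::max<uint32_t>(1, width >> m); };
    auto mip_h = [&](uint32_t m) { return std::max<uint32_t>(1, height >> m); };
    auto dispatch = [&](uint32_t src, uint32_t dst) {
        // Every dispatch reads what the previous one wrote, so a barrier goes
        // between each pair. In Vulkan that would be a vkCmdPipelineBarrier with
        // VK_PIPELINE_STAGE_COMPUTE_SHADER_BIT as both source and destination
        // stage, making VK_ACCESS_SHADER_WRITE_BIT visible to
        // VK_ACCESS_SHADER_READ_BIT (plus write access if the next pass also
        // writes that mip). With everything in VK_IMAGE_LAYOUT_GENERAL there is
        // no layout transition to do.
        if (!steps.empty()) {
            steps.push_back({BloomStep::Kind::Barrier, 0, 0, 0, 0});
        }
        steps.push_back({BloomStep::Kind::Dispatch, src, dst,
                         group_count(mip_w(dst)), group_count(mip_h(dst))});
    };
    // Downsample: mip 0 -> 1 -> ... -> mip_count - 1.
    for (uint32_t m = 1; m < mip_count; ++m) dispatch(m - 1, m);
    // Upsample: mip_count - 1 -> ... -> 1 -> 0.
    for (uint32_t m = mip_count; m-- > 1;) dispatch(m, m - 1);
    return steps;
}
```

The final result still needs its own barrier before whatever consumes it (for me, the composition pass), since that reader runs in a different pipeline stage than the compute shaders.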

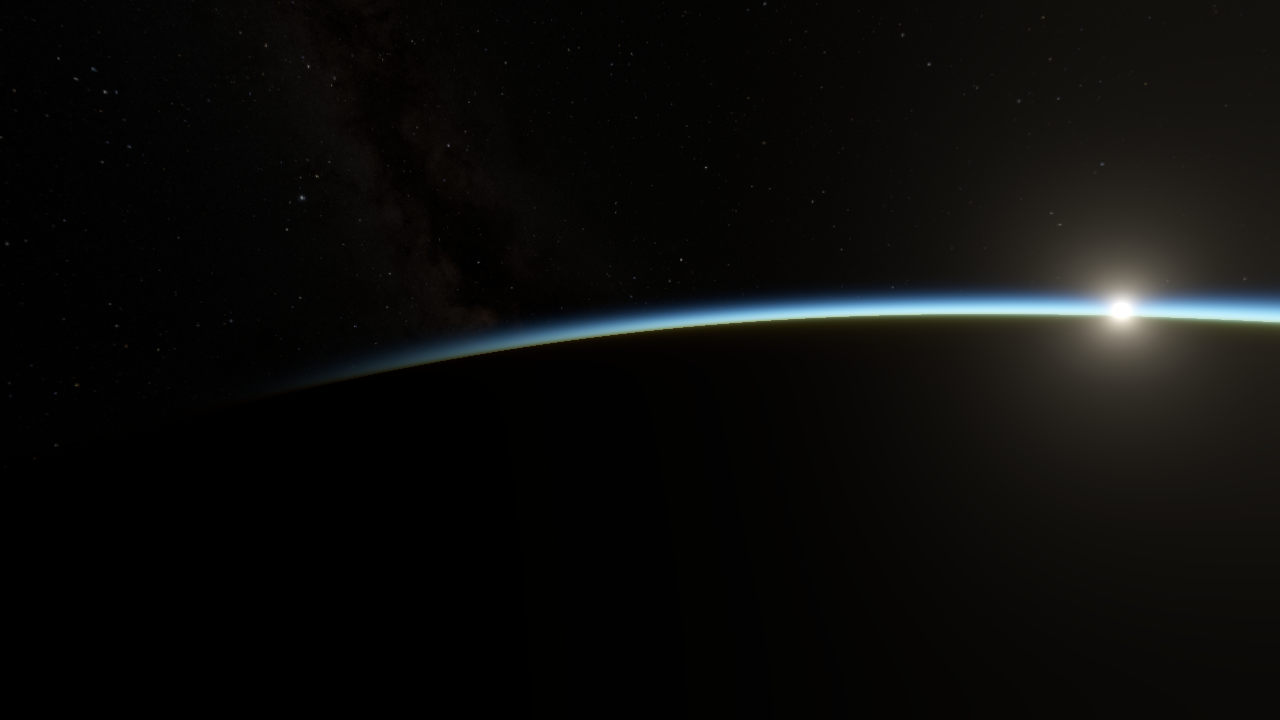

I'm too lazy to write more stuff, so here's a screenshot :-)